AI is firmly embedded in cybersecurity. Attend any cybersecurity conference, event, or trade show and AI is invariably the single biggest capability focus. Cybersecurity providers from across the spectrum make a point of highlighting that their products and services include AI. Ultimately, the cybersecurity industry is sending a clear message that AI is an integral part of any effective cyber defense.

With AI this ubiquitous, it’s easy to assume that AI is always the answer, and that it always delivers better cybersecurity outcomes. The reality, of course, is not so clear-cut.

This report explores the use of AI in cybersecurity, with particular focus on generative AI. It provides insights into AI adoption, desired benefits, and levels of risk awareness based on findings from a vendor-agnostic survey of 400 IT and cybersecurity leaders working in small and mid-sized organizations (50-3,000 employees). It also reveals a major blind spot when it comes to the use of AI in cyber defenses.

The survey findings offer a real-world benchmark for organizations reviewing their own cyber defense strategies. They also provide a timely reminder of the risks associated with AI to help organizations take advantage of AI safely and securely to enhance their cybersecurity posture.

AI terminology

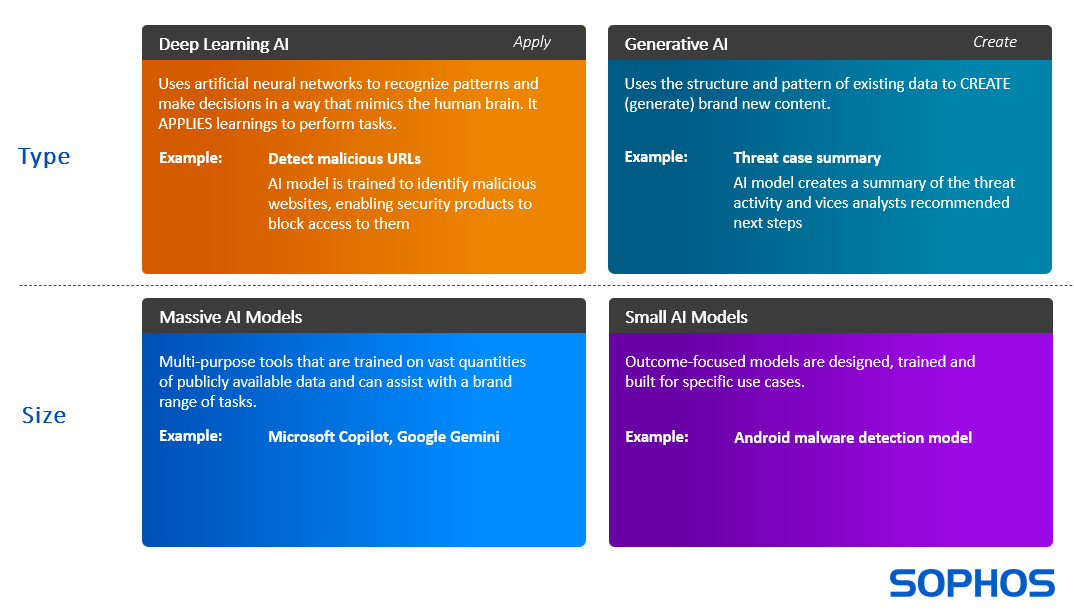

AI is a short acronym that covers a range of capabilities that can support and accelerate cybersecurity in many ways. Two common AI approaches used in cybersecurity are deep learning models and generative AI.

- Deep learning (DL) models APPLY learnings to perform tasks. For example, appropriately trained DL models can identify if a file is malicious or benign in a fraction of a second without ever having seen that file before.

- Generative AI (GenAI) models assimilate inputs and use them to CREATE (generate) new content. For example, to accelerate security operations, GenAI can create a natural language summary of threat activity to date and recommend next steps for the analyst to take.

AI is not “one size fits all” and models vary greatly in size.

- Massive models, such as Microsoft Copilot and Google Gemini, are large language models (LLMs) that are trained on very extensive data sets and can perform a wide range of tasks.

- Small models are typically designed and trained on a very specific data set to perform a single task, such as to detect malicious URLs or executables.

AI adoption for cybersecurity

The survey reveals that AI is already widely embedded in the cybersecurity infrastructure of most organizations, with 98% saying they use it in some capacity:

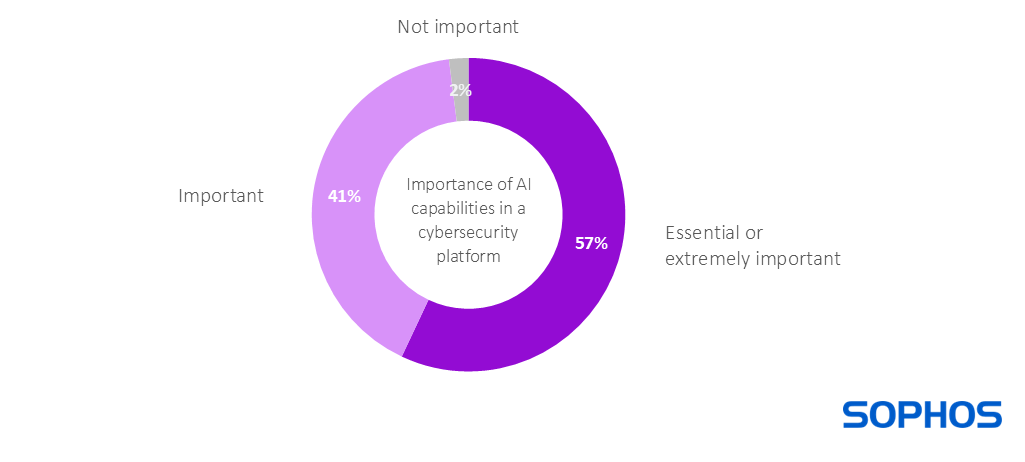

AI adoption is likely to become near universal within a short time frame, with AI capabilities now on the requirements list of 99% (with rounding) of organizations when selecting a cybersecurity platform.

With adoption at this level and set to grow, understanding the risks and associated mitigations for AI in cybersecurity is a priority for organizations of every size and business focus.

GenAI expectations

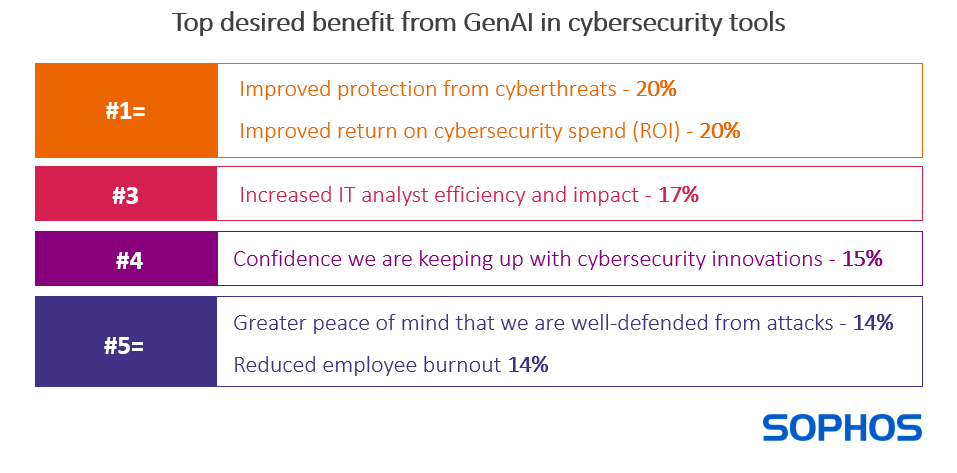

The saturation of GenAI messaging across both cybersecurity and people’s broader business and personal lives has resulted in high expectations for how this technology can enhance cybersecurity outcomes. The survey reveals the top benefit that organizations want GenAI capabilities in cybersecurity tools to deliver, as shown below.

The broad spread of responses reveals that there is no single, standout desired benefit from GenAI in cybersecurity. At the same time, the most common desired gains relate to improved cyber protection or business performance (both financial and operational). The data also suggests that the inclusion of GenAI capabilities in cybersecurity solutions delivers peace of mind and confidence that an organization is keeping up with the latest protection capabilities.

The positioning of reduced employee burnout at the bottom of the ranking suggests that organizations are less aware of, or less concerned about, the potential for GenAI to support users. With cybersecurity staff in short supply, reducing attrition is an important area of focus and one where AI can help.

Desired GenAI benefits change with organization size

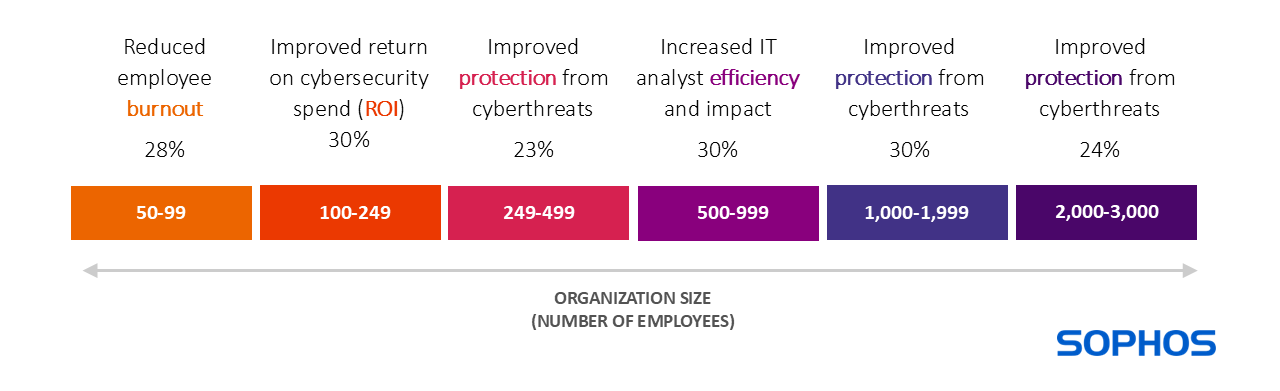

The #1 desired benefit from GenAI in cybersecurity tools varies as organizations increase in size, likely reflecting their differing challenges.

Although reducing employee burnout ranked lowest overall, it was the top desired gain for small businesses with 50-99 employees. This may be because employee absence disproportionately affects smaller organizations, which are less likely to have other staff who can step in and cover.

By contrast, organizations with 100-249 employees prioritize improved return on cybersecurity spend, highlighting their need for tight financial rigor. Larger organizations with 1,000-3,000 employees most value improved protection from cyberthreats.

About the survey

Sophos commissioned independent research specialist Vanson Bourne to survey 400 IT security decision makers in organizations with between 50 and 3,000 employees during November 2024. All respondents worked in the private or charity/not-for-profit sector. Collectively, they use endpoint security solutions from 19 separate vendors and MDR services from 14 providers.

Sophos’ AI-powered cyber defenses

Sophos has been pushing the boundaries of AI-driven cybersecurity for nearly a decade. AI technologies and human cybersecurity expertise work together to stop the broadest range of threats, wherever they run. AI capabilities are embedded across Sophos products and services and delivered through the largest AI-native platform in the industry. To learn more about Sophos’ AI-powered cyber defenses visit www.sophos.com/ai

Source: Sophos